Getty Images

Luigi Mangione is accused of murdering healthcare insurance CEO Brian Thompson

The BBC has complained to Apple after the tech giant's new iPhone feature generated a false headline about a high-profile murder in the United States.

Apple Intelligence, launched in the UK earlier this week, uses artificial intelligence (AI) to summarise and group together notifications.

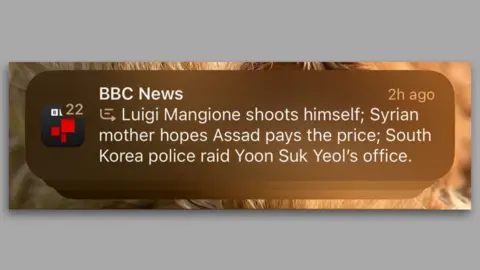

This week, the AI-powered summary falsely made it appear BBC News had published an article claiming Luigi Mangione, the man arrested following the murder of healthcare insurance CEO Brian Thompson in New York, had shot himself. He has not.

A spokesperson from the BBC said the corporation have contacted Apple "to raise this concern and fix the problem".

Apple declined to comment.

A zoomed in iPhone screenshot of the misleading BBC notification

"BBC News is the most trusted news media in the world," the BBC spokesperson added.

"It is essential to us that our audiences can trust any information or journalism published in our name and that includes notifications."

The notification which made a false claim about Mangione was otherwise accurate in its summaries about the overthrow of Bashar al-Assad's regime in Syria and an update on South Korean President Yoon Suk Yeol.

But the BBC does not appear to be the only news publisher which has had headlines misrepresented by Apple's new AI tech.

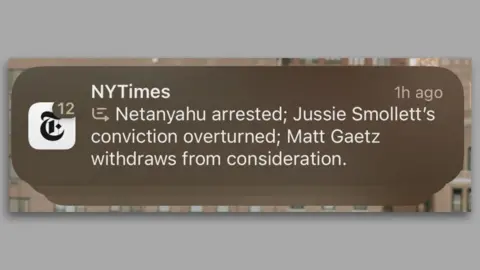

On 21 November, three articles on different topics from the New York Times were grouped together in one notification - with one part reading "Netanyahu arrested", referring to the Israeli prime minister.

It was inaccurately summarising a newspaper report about the International Criminal Court issuing an arrest warrant for Netanyahu, rather than any reporting about him being arrested.

The mistake was highlighted on Bluesky by a journalist with the US investigative journalism website ProPublica.

The BBC has not been able to independently verify the screenshot, and the New York Times did not provide comment to BBC News.

Ken Schwencke

A screenshot of a group notification from the New York Times was also said to be misleading.

'Embarrassing' mistake

Apple says one of the reasons people might like its AI-powered notification summaries is to help reduce the interruptions caused by ongoing notifications, and to allow the user to prioritise more important notices.

It is only available on certain iPhones - those using the iOS 18.1 system version or later on recent devices (all iPhone 16 phones, the 15 Pro, and the 15 Pro Max). It is also available on some iPads and Macs.

Prof Petros Iosifidis, a professor in media policy at City University in London, told BBC News the mistake by Apple "looks embarrassing".

"I can see the pressure getting to the market first, but I am surprised that Apple put their name on such [a] demonstrably half-baked product," he said.

"Yes, potential advantages are there - but the technology is not there yet and there is a real danger of spreading disinformation."

The grouped notifications are marked with a specific icon, and users can report any concerns they have on a notification summary on their devices. Apple has not outlined how many reports it has received.

Apple Intelligence does not just summarise the articles of publishers, and it has been reported the summaries of emails and text messages have occasionally not quite hit the mark.

And this is not the first time a big tech company has discovered AI summaries do not always work.

In May, in what Google described as "isolated examples", its AI Overviews tool for internet searches told some users looking for ways to make cheese stick to pizza that they should consider using "non-toxic glue".

The search engine's AI-generated responses also said geologists recommend humans eat one rock per day.